Learning Docker from Zero: My Cheatsheet Explained

Important note: This post is a work in progress. I’m adding notes on what I have been learning about Docker. Most of this content consists of text snippets taken from the official Docker documentation. Last updated: 2022-06-12.

What is a container?

Definition #1 – High Level

A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another.

Available for both Linux and Windows-based applications, containerized software will always run the same, regardless of the infrastructure. Containers isolate software from its environment and ensure that it works uniformly despite differences for instance between development and staging.

Source: https://www.docker.com/resources/what-container/

Definition #2 – Low Level

A container is a sandboxed process on your machine that is isolated from all other processes on the host machine. That isolation leverages kernel namespaces and cgroups, features that have been in Linux for a long time. Docker has worked to make these capabilities approachable and easy to use.

To summarize, a container:

– Is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI.

– Can be run on local machines, virtual machines, or deployed to the cloud.

– Is portable (can be run on any OS).

– Is isolated from other containers and runs its own software, binaries, and configurations.

Source: https://docs.docker.com/get-started/

What is a container image?

A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

Container images become containers at runtime and in the case of Docker containers – images become containers when they run on Docker Engine.

Source: https://www.docker.com/resources/what-container/

When running a container, it uses an isolated filesystem. This custom filesystem is provided by a container image. Since the image contains the container’s filesystem, it must contain everything needed to run an application – all dependencies, configuration, scripts, binaries, etc. The image also contains other configuration for the container, such as environment variables, a default command to run, and other metadata.

Source: https://docs.docker.com/get-started/

Dockerfile Basic Instructions

FROM

The FROM instruction initializes a new build stage and sets the Base Image for subsequent instructions. As such, a valid Dockerfile must start with a FROM instruction.

Example:

FROM python:3

FROM ubuntu:latest

WORKDIR

The WORKDIR instruction sets the working directory for any RUN, CMD, ENTRYPOINT, COPY and ADD instructions that follow it in the Dockerfile. If the WORKDIR doesn’t exist, it will be created even if it’s not used in any subsequent Dockerfile instruction.

Example:

WORKDIR /app
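WORKDIR can be used more than once. A relative path is resolved against the previous WORKDIR, so in this minimal sketch (the directory names are arbitrary) the final RUN prints /a/b/c during the build:

```dockerfile
FROM ubuntu:latest
# Absolute path: becomes the working directory (created if it does not exist)
WORKDIR /a
# Relative paths are resolved against the previous WORKDIR
WORKDIR b
WORKDIR c
# Prints /a/b/c in the build output
RUN pwd
```

With BuildKit, the output of RUN steps is collapsed by default; docker build --progress=plain shows it.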

RUN

The RUN instruction will execute any commands in a new layer on top of the current image and commit the results. The resulting committed image will be used for the next step in the Dockerfile.

Example:

# shell form
RUN pip3 install -r requirements.txt

# exec form
RUN ["pip3", "install", "-r", "requirements.txt"]

CMD

There can only be one CMD instruction in a Dockerfile. If you list more than one CMD then only the last CMD will take effect.

The main purpose of a CMD is to provide defaults for an executing container. These defaults can include an executable, or they can omit the executable, in which case you must specify an ENTRYPOINT instruction as well.

Examples:

# exec form (the preferred form)
CMD ["python3", "-m", "flask", "run"]

# shell form (an alternative; use only one CMD)
CMD python3 -m flask run
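One practical difference between the two forms: the shell form runs the command through /bin/sh -c, so shell features such as variable expansion work, while the exec form runs the executable directly without a shell. A minimal sketch, assuming a hypothetical GREETING variable used only for illustration:

```dockerfile
FROM ubuntu:latest
# GREETING is a hypothetical variable for this example
ENV GREETING=hello

# Shell form: runs as /bin/sh -c 'echo $GREETING', so the container prints "hello"
CMD echo $GREETING

# Exec-form alternative (no shell, so it would print the literal text $GREETING):
# CMD ["echo", "$GREETING"]
# To get expansion with the exec form, call the shell explicitly:
# CMD ["sh", "-c", "echo $GREETING"]
```

The alternatives are commented out because only the last CMD in a Dockerfile takes effect.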

EXPOSE

The EXPOSE instruction informs Docker that the container listens on the specified network ports at runtime. You can specify whether the port listens on TCP or UDP, and the default is TCP if the protocol is not specified.

The EXPOSE instruction does not actually publish the port. It functions as a type of documentation between the person who builds the image and the person who runs the container, about which ports are intended to be published. To actually publish the port when running the container, use the -p flag on docker run to publish and map one or more ports, or the -P flag to publish all exposed ports and map them to high-order ports.
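A minimal sketch of how EXPOSE and the run-time flags fit together, reusing port 5000 from the examples below; <image-name> is a placeholder:

```dockerfile
FROM python:3
# Documents that the app listens on TCP port 5000; nothing is published yet
EXPOSE 5000/tcp

# Publishing happens only when the container is started, for example:
#   docker run -p 8080:5000 <image-name>   maps host port 8080 to container port 5000
#   docker run -P <image-name>             maps every EXPOSEd port to a random high host port
```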

Examples:

EXPOSE 5000

EXPOSE 5000/tcp

EXPOSE 5000/udp

ENV

The ENV instruction sets the environment variable <key> to the value <value>. This value will be in the environment for all subsequent instructions in the build stage and can be replaced inline in many as well.

Examples:

ENV FLASK_APP=rest_api.py

ENV FLASK_RUN_HOST=0.0.0.0
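Because an ENV value stays in the environment for the rest of the build stage, it can be referenced later, either by the Dockerfile itself (in instructions such as WORKDIR, COPY, or EXPOSE) or by the shell in a RUN command. A minimal sketch; APP_HOME is a hypothetical variable name:

```dockerfile
FROM ubuntu:latest
# APP_HOME is a hypothetical name chosen for this example
ENV APP_HOME=/app

# Dockerfile-level substitution: ${APP_HOME} becomes /app
WORKDIR ${APP_HOME}

# Shell-level expansion: APP_HOME is in the environment of this RUN,
# so the file /app/where.txt contains "Working in /app"
RUN echo "Working in $APP_HOME" > "$APP_HOME/where.txt"
```

The variable is also set inside containers started from the image, and docker run -e APP_HOME=... can override it at run time.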

COPY

The COPY instruction copies new files or directories from <src> and adds them to the filesystem of the container at the path <dest>.

Example:

COPY hom* /mydir/

VOLUME

The VOLUME instruction creates a mount point with the specified name and marks it as holding externally mounted volumes from native host or other containers.

Example:

FROM ubuntu
RUN mkdir /myvol
RUN echo "hello world" > /myvol/greeting
VOLUME /myvol
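Putting the basic instructions together, a Dockerfile for a small Flask app could look like the sketch below. It assumes a project directory containing requirements.txt (listing flask) and rest_api.py, the file names used in the examples above; adjust them for your own app:

```dockerfile
# Base image: a new build stage starts here
FROM python:3

# Following instructions run inside /app (created if missing)
WORKDIR /app

# Install dependencies; each RUN creates a new layer
COPY requirements.txt .
RUN pip3 install -r requirements.txt

# Copy the application code into the image
COPY . .

# Configuration read by "flask run"
ENV FLASK_APP=rest_api.py
ENV FLASK_RUN_HOST=0.0.0.0

# Documentation only: publish it with docker run -p
EXPOSE 5000

# Default command when the container starts (exec form)
CMD ["python3", "-m", "flask", "run"]
```

Build and run it with docker build -t python-docker . followed by docker run -dp 5000:5000 python-docker.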

Dockerfile Other Instructions

ADD

The ADD instruction copies new files, directories or remote file URLs from <src> and adds them to the filesystem of the image at the path <dest>.

Examples:

ADD test.txt /absoluteDir/
ADD --chown=55:mygroup files* /somedir/
ADD --chown=bin files* /somedir/
ADD http://example.com/foobar /

ENTRYPOINT

An ENTRYPOINT allows you to configure a container that will run as an executable. The exec form shown below is the preferred form.

Example:

ENTRYPOINT ["executable", "param1", "param2"]

Understand how CMD and ENTRYPOINT interact

Both CMD and ENTRYPOINT instructions define what command gets executed when running a container. A few rules describe how they work together:

- A Dockerfile should specify at least one CMD or ENTRYPOINT command.

- ENTRYPOINT should be defined when using the container as an executable.

- CMD should be used as a way of defining default arguments for an ENTRYPOINT command or for executing an ad-hoc command in a container.

- CMD will be overridden when running the container with alternative arguments.

Example of a good use of ENTRYPOINT instead of CMD or both:

FROM debian:buster-slim

LABEL maintainer="Manuel Castellin"

RUN apt-get update \
    && apt-get install --no-install-recommends -qqy \
       dnsutils \
    && rm -rf /var/lib/apt/lists

ENTRYPOINT ["dig"]

This container is used as a CLI utility to run the dig command. In this scenario, the dig command in ENTRYPOINT is not overridden when the container is run from the CLI (unless you pass --entrypoint): any arguments given to docker run are appended to it, so docker run <image-name> example.com runs dig example.com.

Example from: https://github.com/mcastellin/yt-docker-tricks-examples/blob/main/entrypoint-vs-cmd/01-cli-utility/Dockerfile

This is a great video to understand the differences and when to use each one.
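Extending the dig example, CMD can provide default arguments that are appended to the ENTRYPOINT and are replaced by whatever you pass to docker run. A minimal sketch; the default arguments, the newer bookworm-slim base tag, and the dig-util image name are my own choices for illustration:

```dockerfile
FROM debian:bookworm-slim
RUN apt-get update \
    && apt-get install --no-install-recommends -qqy dnsutils \
    && rm -rf /var/lib/apt/lists/*

# Always executed: the container behaves like the dig binary
ENTRYPOINT ["dig"]
# Default arguments, used only when docker run receives none
CMD ["+short", "example.com"]

# docker build -t dig-util .
# docker run --rm dig-util                     runs: dig +short example.com
# docker run --rm dig-util +short docker.com   runs: dig +short docker.com (CMD replaced)
```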

LABEL

The LABEL instruction adds metadata to an image.

Example:

LABEL "com.example.vendor"="ACME Incorporated"
LABEL com.example.label-with-value="foo"
LABEL version="1.0"
LABEL description="This text illustrates \
that label-values can span multiple lines."

USER

The USER instruction sets the user name (or UID) and optionally the user group (or GID) to use when running the image and for any RUN, CMD and ENTRYPOINT instructions that follow it in the Dockerfile.

Examples:

USER <user>[:<group>]

USER malvarez
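When USER is given a name rather than a numeric UID, that account must already exist in the image, so it is usually created first. A minimal sketch on a Debian-based image, reusing malvarez from the example above as a hypothetical account name:

```dockerfile
FROM python:3
# Create a system group and user (groupadd/useradd ship with Debian-based images)
RUN groupadd --system malvarez \
    && useradd --system --gid malvarez --create-home malvarez

# RUN, CMD and ENTRYPOINT below, and the running container, use this user instead of root
USER malvarez
WORKDIR /home/malvarez

# Prints "malvarez" when the container starts
CMD ["whoami"]
```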

Docker CLI Basic Commands

To build a docker container image from a Dockerfile:

docker build -t <image-name>:<TAG> <context-path>

Here <context-path> is the build context, usually the directory that contains the Dockerfile (often .).

To list all the Docker images in the Docker Engine running on your server (both commands are equivalent):

docker image ls

docker images

To create a tag for an image:

docker tag python-docker:latest python-docker:v1.0.0

To run an instance of a Docker container image (-d runs it in the background, -p 3000:3000 maps host port 3000 to container port 3000):

docker run -dp 3000:3000 --name <name> <image-name>

To list all running Docker containers:

docker ps

To list all running and stopped Docker containers:

docker ps -a

To stop, start, and restart Docker containers:

docker stop <container-id>

docker start <container-id>

docker restart <container-id>

To remove a stopped Docker container:

docker rm <container-id>

To forcefully stop and remove a container in one command:

docker rm -f <container>

To delete an image (both commands are equivalent):

docker image rm <image-name>

docker rmi <image-name>

To watch container logs (-f keeps following new output):

docker logs <container-name>

docker logs -f <container-name>

Docker Container Operations Commands

Run a container from the “ubuntu” image and run the commands “shuf -i 1-10000 -n 1 -o /data.txt && tail -f /dev/null” with bash:

docker run -d ubuntu bash -c "shuf -i 1-10000 -n 1 -o /data.txt && tail -f /dev/null"

In case you’re curious about the command, we’re starting a bash shell and invoking two commands (which is why we have the &&). The first portion picks a single random number and writes it to /data.txt. The second command simply watches a file to keep the container running.

Run a container from the “node:12-alpine” image:

docker run -d \
  -p 3000:3000 \
  -w /app \
  -v "$(pwd):/app" \
  node:12-alpine \
  sh -c "yarn install && yarn run dev"

Here -p 3000:3000 publishes the container port, -w /app creates and sets the working directory, -v "$(pwd):/app" bind-mounts the current directory into /app, and sh -c runs the two yarn commands with sh.

Execute commands in a container:

docker exec <container-id> cat /data.txt

Run a bash shell in a Linux-based container (Alpine-based images ship sh instead of bash):

docker exec -it <container-id> bash

Run the ubuntu container and execute the “ls /” command; the container stops once the command finishes:

docker run -it ubuntu ls /

Share images using the GitLab Container Registry

Log in to the GitLab Container Registry using your GitLab username and password:

docker login registry.gitlab.com

Build the image with the GitLab registry URL as its name:

docker build -t registry.gitlab.com/michadom/devnet-expert:<TAG_NAME> .

Push the image to GitLab and pull it back:

docker push registry.gitlab.com/michadom/devnet-expert:<TAG_NAME>

docker pull registry.gitlab.com/michadom/devnet-expert:<TAG_NAME>

Docker Volumes

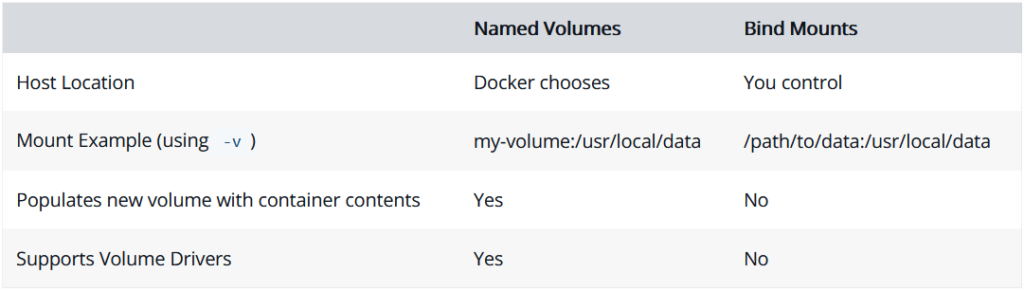

Named Volume

Create a volume:

docker volume create todo-db

Start the todo app container, but add the -v flag to specify a volume mount. We will use the named volume and mount it to /etc/todos, which will capture all files created at that path:

docker run -dp 3000:3000 -v todo-db:/etc/todos getting-started

A lot of people frequently ask “Where is Docker actually storing my data when I use a named volume?” If you want to know, you can use the docker volume inspect command:

docker volume inspect todo-db

List all created volumes:

docker volume ls

Bind Mount

With bind mounts, we control the exact mountpoint on the host. We can use this to persist data, but it’s often used to provide additional data into containers. When working on an application, we can use a bind mount to mount our source code into the container to let it see code changes, respond, and let us see the changes right away.

Using bind mounts is very common for local development setups. The advantage is that the dev machine doesn’t need to have all of the build tools and environments installed. With a single docker run command, the dev environment is pulled and ready to go. We’ll talk about Docker Compose in a future step, as this will help simplify our commands (we’re already getting a lot of flags).

Multi container apps

Up to this point, we have been working with single container apps. But, we now want to add MySQL to the application stack. The following question often arises – “Where will MySQL run? Install it in the same container or run it separately?” In general, each container should do one thing and do it well. A few reasons:

- There’s a good chance you’d have to scale APIs and front-ends differently than databases

- Separate containers let you version and update versions in isolation

- While you may use a container for the database locally, you may want to use a managed service for the database in production. You don’t want to ship your database engine with your app then.

- Running multiple processes will require a process manager (the container only starts one process), which adds complexity to container startup/shutdown.

Container networking

Remember that containers, by default, run in isolation and don’t know anything about other processes or containers on the same machine. So, how do we allow one container to talk to another? The answer is networking.

Create the network:

docker network create todo-app

Start a MySQL container and attach it to the network:

docker run -d \
  --network todo-app \
  --network-alias mysql \
  -v todo-mysql-data:/var/lib/mysql \
  -e MYSQL_ROOT_PASSWORD=secret \
  -e MYSQL_DATABASE=todos \
  mysql:5.7

Start the app container on the same network. It reaches the database through the mysql network alias:

docker run -dp 3000:3000 \
  -w /app -v "$(pwd):/app" \
  --network todo-app \
  -e MYSQL_HOST=mysql \
  -e MYSQL_USER=root \
  -e MYSQL_PASSWORD=secret \
  -e MYSQL_DB=todos \
  node:12-alpine \
  sh -c "yarn install && yarn run dev"

Docker Compose

Docker Compose is a tool that was developed to help define and share multi-container applications. With Compose, we can create a YAML file to define the services and with a single command, can spin everything up or tear it all down.

At the root of the app project, create a file named docker-compose.yml:

version: "3.7"

services:
  app:
    image: node:12-alpine
    command: sh -c "yarn install && yarn run dev"
    ports:
      - "3000:3000"
    working_dir: /app
    volumes:
      - ./:/app
    environment:
      MYSQL_HOST: mysql
      MYSQL_USER: root
      MYSQL_PASSWORD: secret
      MYSQL_DB: todos

  mysql:
    image: mysql:5.7
    volumes:
      - todo-mysql-data:/var/lib/mysql
    environment:
      MYSQL_ROOT_PASSWORD: secret
      MYSQL_DATABASE: todos

  noa-nam:
    build:
      context: ./NetworkAbstraction/docker-config/
    image: noa-network-abstraction:latest
    container_name: noa-nam
    hostname: noa-nam
    environment:
      - POSTGRES_USER=postgres
      - POSTGRES_PASSWORD=postgres
      - DEVELOPMENT=True
      - C_FORCE_ROOT=True
    networks:
      - noa-network
    expose:
      - "5000"
    ports:
      - "5000:5000"
    volumes:
      - ./NetworkAbstraction:/root/NetworkAbstraction
      - ./NetworkAbstraction/logs:/var/log/NetworkAbstraction
    restart: unless-stopped

volumes:
  todo-mysql-data:

networks:
  noa-network:
    driver: bridge
    name: noa-network
    ipam:
      driver: default
      config:
        - subnet: 10.0.0.0/24

Start all the services in the background:

docker-compose up -d

Follow the logs of all services:

docker-compose logs -f

Follow the logs of the app service only:

docker-compose logs -f app

Docker Compose Links

Links allow you to define extra aliases by which a service is reachable from another service. They are not required to enable services to communicate: by default, any service can reach any other service at that service’s name. In the following example, db is reachable from web at the hostnames db and database:

version: "3.9"

services:
  web:
    build: .
    links:
      - "db:database"
  db:
    image: postgres

Docker Ignore File

Before the docker CLI sends the context to the docker daemon, it looks for a file named .dockerignore in the root directory of the context. If this file exists, the CLI modifies the context to exclude files and directories that match patterns in it. This helps to avoid unnecessarily sending large or sensitive files and directories to the daemon and potentially adding them to images using ADD or COPY.

The CLI interprets the .dockerignore file as a newline-separated list of patterns similar to the file globs of Unix shells. For the purposes of matching, the root of the context is considered to be both the working and the root directory. For example, the patterns /foo/bar and foo/bar both exclude a file or directory named bar in the foo subdirectory of PATH or in the root of the git repository located at URL. Neither excludes anything else.

If a line in .dockerignore file starts with # in column 1, then this line is considered as a comment and is ignored before interpreted by the CLI.

Here is an example .dockerignore file:

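# comment
*/temp*
*/*/temp*
temp?

In this example, */temp* excludes files and directories whose names start with temp in any immediate subdirectory of the root (for example /somedir/temporary.txt), */*/temp* does the same two levels below the root (for example /somedir/subdir/temporary.txt), and temp? excludes files and directories in the root whose names are temp plus one character (for example /tempa and /tempb).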

Check Docker System Storage

docker system df

Delete Everything Unused: Stopped Containers, Unused Images, Volumes and Networks, and Build Cache

docker system prune -a --volumes

Useful Images on Docker Hub

mysql