Computer Virtualization. Virtual Machines (VMs)

Introduction

In recent years, data networks have been evolving rapidly, and network engineers have had to evolve just as quickly. Network Programmability, Network Automation, Software Defined Networks and Virtualized Network Functions are just some of the big topics heard today. In one way or another, all of this evolution has been driven by virtualization. Let’s pay close attention, because this is where we start learning the new concepts that must be clear in order to understand what it is all about.

What are Virtual Machines?

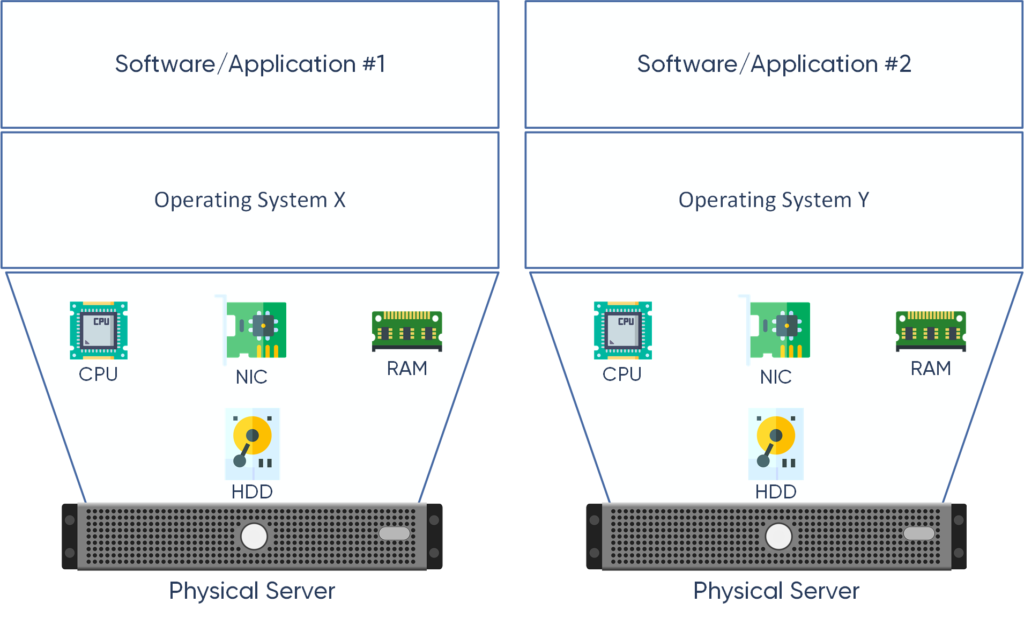

Let’s start by defining computer virtualization. Computer virtualization is the process of turning a computer into software: all of its resources (memory, storage devices, CPUs) are represented in software. In other words, it is the abstraction of a computer’s resources, which allows them to be manipulated like any other software. The result of this process is a virtual machine (VM).

One of the best definitions of a virtual machine comes from the official documentation of one of the major virtualization companies, VMware. I share it below:

“A virtual machine is a software computer that, like a physical computer, runs an operating system and applications. The virtual machine is made up of a set of specifications and configuration files and is compatible with the physical resources of the host. Each virtual machine has virtual devices that provide the same functionality as physical hardware and have additional benefits in terms of portability, manageability and security.”

Another definition that reinforces the previous one is that a virtual machine is a software version of a computer or server: an exact copy of the physical server in question, including its Operating System (OS) and resources. Multiple virtual machines can be loaded on the same physical server, and their resources (memory, CPU and hard disk) can be modified according to the needs of the application. They are not limited to a single OS either. Different OSs can run side by side on these VMs, since each VM is independent of the others.
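To make the idea of "a computer described as software" more tangible, here is a minimal Python sketch. The class names `VMSpec` and `PhysicalServer`, the function `fits` and all the sizes are hypothetical and only illustrate the concept. The check is also simplified: it assumes no overcommitment, while real hypervisors often allow VMs to share CPUs.

```python
from dataclasses import dataclass


@dataclass
class VMSpec:
    """Hypothetical description of a VM: just data that can be edited."""
    name: str
    os: str
    vcpus: int
    memory_gb: int
    disk_gb: int


@dataclass
class PhysicalServer:
    """Hypothetical description of the host's physical resources."""
    cpus: int
    memory_gb: int
    disk_gb: int


def fits(server, vms):
    """Return True if the server can hold all VMs without overcommitting."""
    return (
        sum(vm.vcpus for vm in vms) <= server.cpus
        and sum(vm.memory_gb for vm in vms) <= server.memory_gb
        and sum(vm.disk_gb for vm in vms) <= server.disk_gb
    )
```

Because a VM is only a specification, resizing it is a matter of changing a value, for example `vm.memory_gb = 16`, and checking again whether the host still has room.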

Take the case of a traditional server: it could only run a single operating system and, in many cases, a single application. With the development of virtualization, it became possible to reserve resources from a PC or server to create several virtual machines. How was this possible, and what drove companies to virtualization? Let’s keep reading.

How did virtual machines arise?

During the last decades, society has become very dependent on computers to live day to day or to work. In fact, companies have become so dependent on computers, servers, and networks that taking one of these elements out of service, even for a short time, can have a very significant financial impact for the company.

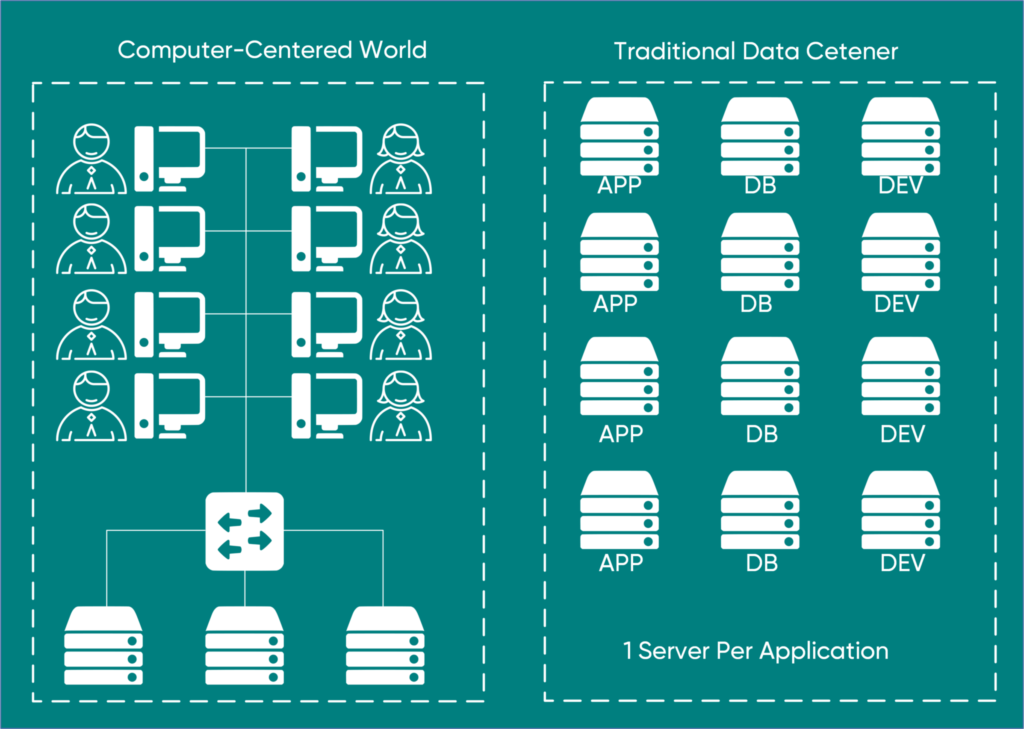

As a result of living in a computer-centric world, companies have to run applications for almost every division of their business, and all of these applications run on servers. In general, these applications require one or more dedicated servers (Application, Database, Test Environments, Backups, Development, etc.), which do not use their maximum capacity. As you can imagine, this growth in applications brings with it an implementation and operational expense, situations that were becoming major problems and headaches for companies.

Main Problems Facing Companies

Companies were facing several main problems.

Physical Space Problems

The digital age has made practically every operation within a company dependent on applications. Each application normally required dedicated servers, and those dedicated servers in turn required more physical space. For multinational companies that offer Internet content, Web Hosting and other services, this model did not scale in terms of physical space.

Resource underutilization problems on computers

Not only did the applications require dedicated application servers, but these applications did not use 100% of the resources. In a traditional architecture, the different applications that were hosted on different servers consumed less than 50% of the resources.

High energy consumption problems (air conditioning, etc.)

Servers and air conditioning are the major consumers of energy in data centers. The more the company grew, the more the data center grew and the more energy consumption grew, bringing high costs to keep these centers operational 24/7.

Backup Copies Problems

Everyone knows that seconds feel like minutes, and minutes like hours, when the application that generates a company’s profits goes out of service. If an accident in the data center corrupts the storage drives and a restoration from Backups is required, the process is neither quick nor easy. Even if we have all the data, we must reinstall the Operating System, the applications and the configuration, and only then load the Backup data. You don’t have to experience it to imagine how complicated it is and how long it can take.

High Availability and Redundancy Problems

Traditionally, applications hosted on physical servers required additional physical servers to form a Cluster that provided High Availability and Redundancy. These clusters relied on complex protocols to monitor and synchronize both servers so that, if the active server failed, the second one could take control.

Hardware Incompatibility Problems

Certain application providers deliver their solutions with the hardware included, so it is very common to find solutions from multiple manufacturers in the same data center. Sometimes you need a spare part for a server and cannot use the parts you have available, because they are incompatible with that server’s manufacturer. As a result, you are forced to stock the same type of part from several manufacturers, since their parts are not compatible with each other.

Maintenance and operation

The administration, operation and maintenance of the servers can be complicated as your Data Center grows. It is necessary to hire more staff.

A solution was found for all these problems: virtual machines.

Benefits of virtual machines

Just by knowing the problems that virtual machines came to solve, we can imagine the large number of benefits that arise from their use.

Reduce Costs

The most obvious benefit of VMs is that they reduce the expense of purchasing and maintaining new physical servers for new applications. In addition, they reduce the energy and cooling costs caused by the high temperatures that physical servers generate.

Less space

In terms of space the reduction is remarkable. Racks holding hundreds of servers can be reduced to as little as a couple of racks of servers running hundreds of highly available virtual machines.

Availability and Flexibility

Other benefits virtualization offers are High Availability, Flexibility and high fault tolerance. With VMs, a hardware failure no longer has to mean a long outage: in case of failure, the controller can quickly relaunch a VM on another server, or even move it automatically, without data loss and without long periods with applications out of service. The centralized controller does this by monitoring the VMs through a heartbeat mechanism.

This ability to move quickly between servers plays a huge role in Business Continuity and Disaster Recovery. Moving a virtual machine can be as simple as copying a file. Now consider the following: IT can move critical applications seamlessly from one data center to another in a different location without shutting them down or taking them out of service.
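The heartbeat monitoring described in this section can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the names `Host`, `VM` and `failover`, the 5-second timeout and the "pick the first healthy host" policy are all hypothetical, and real controllers apply far more elaborate placement and fencing rules.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    healthy: bool = True


@dataclass
class VM:
    name: str
    host: str              # name of the host currently running the VM
    last_heartbeat: float  # time (in seconds) of the last heartbeat received


def failover(vms, hosts, now, timeout=5.0):
    """Relaunch VMs with a stale heartbeat on another healthy host.

    Returns a list of (vm_name, old_host, new_host) for every VM moved.
    """
    moved = []
    for vm in vms:
        if now - vm.last_heartbeat <= timeout:
            continue  # heartbeat is recent, the VM is considered alive
        candidates = [h for h in hosts if h.healthy and h.name != vm.host]
        if not candidates:
            continue  # no healthy host available, nothing can be done here
        new_host = candidates[0]
        moved.append((vm.name, vm.host, new_host.name))
        vm.host = new_host.name
        vm.last_heartbeat = now  # the relaunched VM starts reporting again
    return moved
```

The controller would call `failover` periodically. A VM that stops sending heartbeats is simply started again elsewhere, which is possible because the VM is software and not tied to one physical server.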

Rapid application provisioning

Previously, building, testing, developing, and publishing servers for application developers was a difficult, tedious, and costly task, because different testing environments were required for different projects. With VMs, a new environment can be created from a copy of an existing machine instead of installing a new physical server.

Another benefit, no less important, that virtualization naturally promotes is standardization.

Use of Resources

Since VMs are software, several machines can be installed on a single physical machine and take advantage of up to 80% of the resources of that server. And not only can they run on servers, they can also be suspended or paused and moved to a different physical server whenever you want. You can even make one or more copies and run them on multiple servers. All of this is quick and easy.

The magic that has made computer virtualization a reality is known as the Hypervisor.
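As a rough, back-of-the-envelope illustration of this consolidation, the following Python sketch estimates how many physical hosts are needed for a set of workloads. The function name `hosts_needed` and the workload figures are hypothetical; the 80% target comes from the figure mentioned above. It only looks at one aggregate load number and ignores memory, storage and failover headroom.

```python
def hosts_needed(vm_loads_percent, target_utilization_percent=80):
    """Estimate the number of hosts needed to run the given workloads.

    vm_loads_percent: load of each workload, as a percentage of one host.
    target_utilization_percent: how full we allow each host to become.
    """
    if not vm_loads_percent:
        return 0
    total = sum(vm_loads_percent)
    # Ceiling division using integers only.
    return -(-total // target_utilization_percent)
```

For example, ten applications that each use 20% of a dedicated server add up to a load of 200%. At a target of 80% per host, `hosts_needed([20] * 10)` gives 3 hosts instead of the original 10 servers.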

The Hypervisor

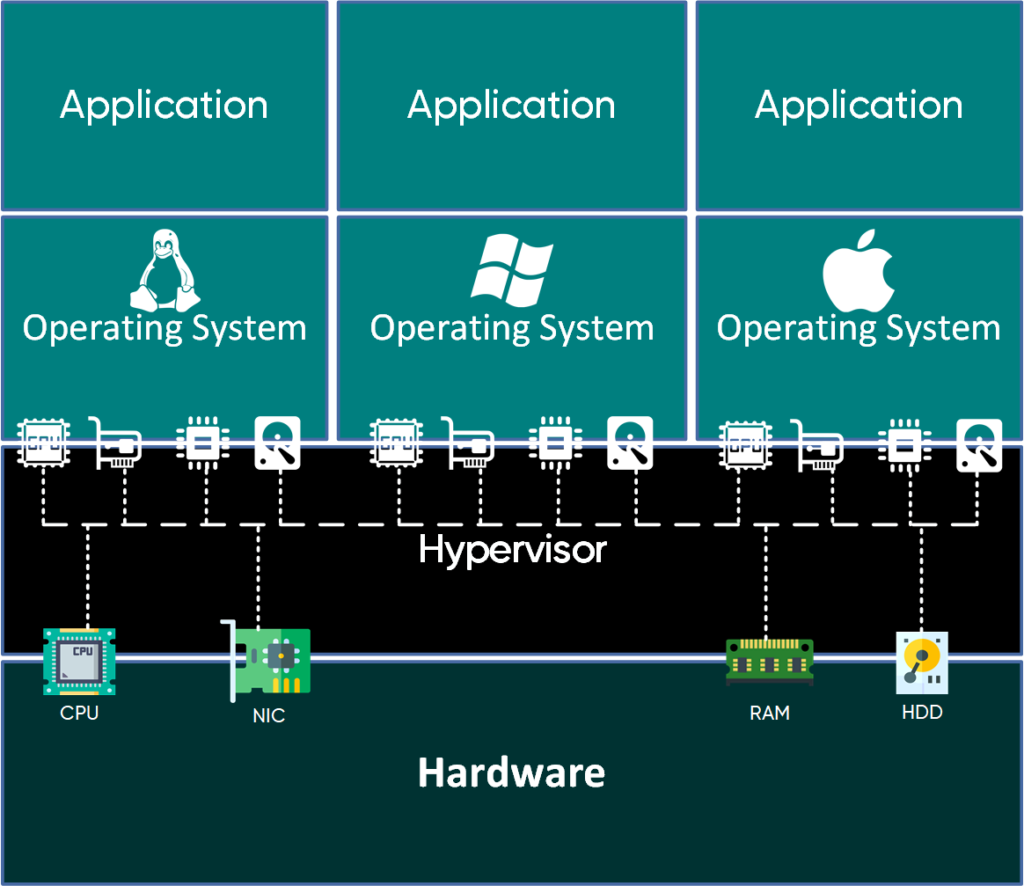

The Hypervisor is software in charge of managing the communication between the virtual resources of the VMs and the physical resources of the server. It is the virtualization control layer that works as an Operating System of Operating Systems, managing and monitoring the VMs.

As an Operating System it contains the drivers to be able to manage the physical resources, say, the hard disks, the network cards, CPUs and other input and output interfaces. Now, as an Operating System for Operating Systems, it is in charge of creating the machines, monitoring them and connecting the virtual resources assigned to each one with the physical resources.

As a VM monitor, it is in charge of correctly managing the communication of all the virtual machines and their OSs with the physical resources, sharing those resources among them through multiplexing.
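One simple way to picture this multiplexing is time slicing: a single physical CPU is handed to each VM in turn for a short period. The Python sketch below uses plain round-robin scheduling as an assumption for illustration; the function name `round_robin` and the work units are hypothetical, and real hypervisor schedulers are considerably more sophisticated.

```python
from collections import deque


def round_robin(vm_work, time_slice=2):
    """Share one physical CPU among several VMs in fixed time slices.

    vm_work: dict mapping a VM name to the units of CPU time it still needs.
    Returns the executed slices as a list of (vm_name, units_run) tuples.
    """
    queue = deque(vm_work.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(time_slice, remaining)
        if run > 0:
            timeline.append((name, run))
        if remaining - run > 0:
            # The VM is not finished, so it goes back to the end of the queue.
            queue.append((name, remaining - run))
    return timeline
```

Each VM behaves as if it had its own CPU, while the physical CPU is actually switching between them one slice at a time.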

Hypervisors enable the virtualization of computer hardware such as CPU, disk, network, RAM, etc., and allow the installation of guest VMs on top of them. We can create multiple guest VMs with different Operating Systems on a single hypervisor. For example, we can use a native Linux machine as a host, and after setting up a type-2 hypervisor we can create multiple guest machines with different OSes, commonly Linux and Windows.

Hardware virtualization is supported by most modern CPUs, and it is the feature that allows hypervisors to virtualize the physical hardware of a host system, thus sharing the host system’s processing resources with multiple guest systems in a safe and efficient manner. In addition, most modern CPUs also support nested virtualization, a feature that enables VMs to be created inside another VM.
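On a Linux host, one way to see whether the CPU exposes hardware virtualization is to look at the CPU flags in `/proc/cpuinfo`: `vmx` indicates Intel VT-x and `svm` indicates AMD-V. The Python sketch below is a minimal check for x86 machines. The function names are hypothetical, and a present flag does not guarantee that the feature is enabled in the firmware.

```python
def virtualization_support(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V' or None from the text of /proc/cpuinfo."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


def read_cpuinfo(path="/proc/cpuinfo"):
    """Read the CPU description exposed by the Linux kernel."""
    with open(path, encoding="utf-8") as f:
        return f.read()
```

On a Linux machine, `virtualization_support(read_cpuinfo())` returns the detected technology, or `None` if neither flag is listed.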

Types of Hypervisors

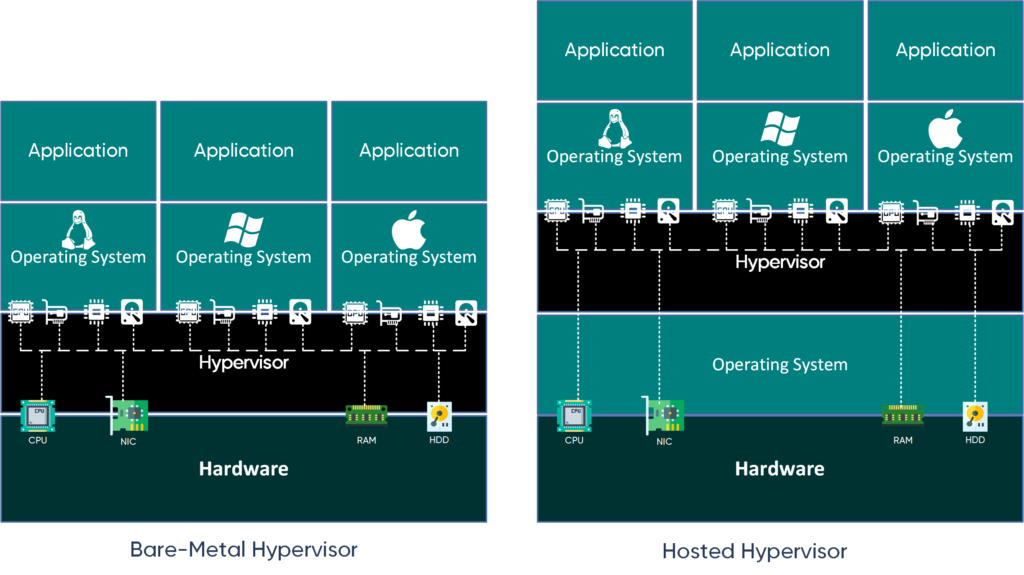

There are two types of hypervisors with an important difference between them:

- Bare-Metal or Type 1 hypervisor. This type of hypervisor runs directly on the server hardware, without any native Operating System underneath. As software, it is itself an Operating System.

- Hosted or Type 2 hypervisor. This type of hypervisor runs on top of an Operating System already installed on a server.

Some of the most popular hypervisors are:

Type-1 hypervisor

- AWS Nitro

- IBM z/VM

- Microsoft Hyper-V

- Nutanix AHV

- Oracle VM Server for SPARC

- Oracle VM Server for x86

- Red Hat Virtualization

- VMware ESXi

- Xen.

Type-2 hypervisor

- Parallels Desktop for Mac

- VirtualBox

- VMware Player

- VMware Workstation.

And then there are the exceptions: hypervisors that fit both categories and could be listed under both type-1 and type-2. They run as kernel modules of a general-purpose operating system (KVM on Linux, bhyve on FreeBSD), so they act as type-1 and type-2 hypervisors at the same time. Examples are:

- KVM

- bhyve.

How to choose which hypervisor to use?

The first consideration when choosing a hypervisor is whether we want a Hosted or a Bare-Metal type.

Hosted hypervisors are easier to install, but they tend to perform below full capacity because of the extra layer (the host Operating System) between them and the hardware. However, they offer greater flexibility, and if you already have servers running a specific operating system, the transition to virtualization is easier.

Bare-Metal (native) hypervisors typically offer better performance for the virtual machines running on them because they run directly on the hardware. The resulting lower latency is an important factor, especially in a service model where there may be Service Level Agreements (SLAs) that specify server and application performance.

So the choice will depend on the scenario you are in and the investment in time and money that you would like to make.

Virtualized Data Centers, the next step

The virtualization of Data Centers (“Virtualized Data Centers”) is the next step taken with virtual machines.

First of all, it is important to understand that a Virtualized Data Center is not a Cloud, although it puts you a few steps away from having one. When we talk about Virtualized Data Centers, we are talking about an infrastructure where all the application servers are virtualized. The Cloud is a service infrastructure in which the resources of the virtual infrastructure (applications, software, infrastructure) are shared and offered as a service. The key word in this difference is Service. Understanding the difference between a Cloud and a Virtualized Data Center helps to understand the definition of Cloud.

In practical terms, the benefits of transforming a traditional Data Center into a Virtualized Data Center are:

- Produce Less Heat

- Reduce Hardware Spending

- Faster deployments

- Test and Development Environments are deployed faster.

- Rapid reimplementation of applications

- Backup Copies Made Easier

- Faster Disaster Recovery

- Server Standardization

- Separation of Services

- Easy migration to the Cloud

Conclusion

What we saw in this publication is an important step toward understanding and learning about cutting-edge topics such as Clouds and Virtualized Network Functions.

Tutorials on how to install virtual machines using the different types of hypervisors are coming soon.